Previous blogs examined how the Pilbara model helps identify programs for investment and disinvestment (#5) and illuminates the economic relationships between programs (degrees) and individual courses (subjects). Now we turn to “Program Review” – a deep dive into the specifics of particular programs.

Traditional reviews look at a program’s curricular structure, the institution’s capacity (in terms of faculty, library resources, infrastructure, etc.) to deliver high-quality education in the area, student demand, and in some cases, the delivered quality of teaching. Typically, these reviews have not examined a program’s operational detail or economic factors like revenue, cost, and margin, even though these are important aspects of performance. Now, thanks to the Pilbara model, that can change.

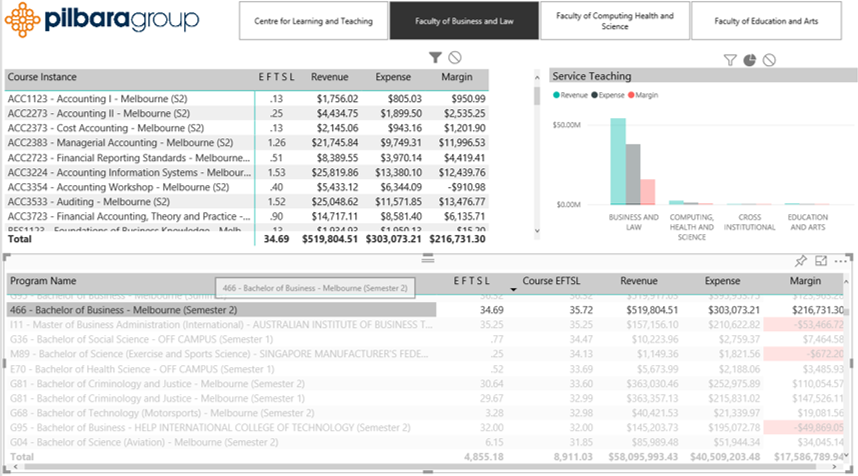

Step 1 is to examine the connections between a program and the courses that underpin it. This can be achieved with a simple dashboard that defines the linkages between courses and programs. Users can click on a course and see the program(s) in which the students taking that course are registered and, more importantly, the revenue, expense and margin of both the course and the overall program(s).

Alternatively, users can click on a program and see the courses that the students in that particular program are undertaking—and, again, the revenue, expense and margin of each course and the overall program. Blog #8 presented these economic results in aggregate form, but now we drill down to the individual courses for each program.
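To make the two-way lookup concrete, here is a minimal Python sketch of how course-to-program linkages might be derived from enrolment records. The record layout, the program and course names, and the `build_linkages` helper are all illustrative assumptions, not the Pilbara model's actual data structures.

```python
from collections import defaultdict

# Hypothetical enrolment records: (student_id, program, course).
ENROLMENTS = [
    ("s1", "BSc Biology", "CHEM101"),
    ("s1", "BSc Biology", "BIOL110"),
    ("s2", "BSc Chemistry", "CHEM101"),
    ("s3", "BA History", "HIST100"),
    ("s3", "BA History", "BIOL110"),
]


def build_linkages(enrolments):
    """Build both lookup directions from the courses students actually take."""
    programs_by_course = defaultdict(set)
    courses_by_program = defaultdict(set)
    for _student, program, course in enrolments:
        programs_by_course[course].add(program)
        courses_by_program[program].add(course)
    return programs_by_course, courses_by_program


programs_by_course, courses_by_program = build_linkages(ENROLMENTS)

# "Click on a course": which programs do its students belong to?
print(sorted(programs_by_course["CHEM101"]))

# "Click on a program": which courses are its students taking?
print(sorted(courses_by_program["BA History"]))
```

Because the linkages come from enrolments rather than the catalogue, an elective such as the hypothetical `BIOL110` shows up under every program whose students actually take it.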

So, what can program reviewers do with this new information? For one thing, they can see yearly and trend data on every program’s cost, revenue, and margin, both in total and on a per-credit-hour basis (or per EFTSL in Australia). We described how this works for individual courses in Blog #8, and the linkage information allows these results to be aggregated to the program level. Programs, not courses, represent the university’s face to the market. Without the explicit course-to-program linkages described above, there is a disconnect between data for the “production” side of the institution and data about the marketplace. Cross-referencing the two kinds of data should be a major objective in program review.
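As a rough illustration of the aggregation step, the sketch below rolls course-level revenue and cost up to programs. It assumes, purely for illustration, that each course's totals are shared among programs in proportion to the credit hours their students take in that course; the Pilbara model's actual apportionment rule may differ, and all figures and names are made up.

```python
from collections import defaultdict

# Hypothetical course totals for one year: course -> (revenue, cost).
COURSE_TOTALS = {
    "CHEM101": (120_000.0, 90_000.0),
    "BIOL110": (80_000.0, 100_000.0),
}

# Hypothetical credit hours taken in each course, keyed by (course, program).
CREDIT_HOURS = {
    ("CHEM101", "BSc Biology"): 300,
    ("CHEM101", "BSc Chemistry"): 100,
    ("BIOL110", "BSc Biology"): 200,
}


def program_economics(course_totals, credit_hours):
    """Apportion course revenue and cost to programs by credit-hour share (an assumption)."""
    hours_per_course = defaultdict(float)
    for (course, _program), hours in credit_hours.items():
        hours_per_course[course] += hours

    totals = defaultdict(lambda: {"revenue": 0.0, "cost": 0.0, "credit_hours": 0.0})
    for (course, program), hours in credit_hours.items():
        revenue, cost = course_totals[course]
        share = hours / hours_per_course[course]
        totals[program]["revenue"] += revenue * share
        totals[program]["cost"] += cost * share
        totals[program]["credit_hours"] += hours

    result = {}
    for program, t in totals.items():
        margin = t["revenue"] - t["cost"]
        result[program] = {
            **t,
            "margin": margin,
            "margin_per_credit_hour": margin / t["credit_hours"],
        }
    return result


ECONOMICS = program_economics(COURSE_TOTALS, CREDIT_HOURS)
for program, figures in sorted(ECONOMICS.items()):
    print(program, figures)
```

Running the same calculation for each year would give the trend view, and swapping credit hours for EFTSL would give the Australian per-unit equivalent.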

The linkages also permit reviewers to analyse the operational and economic detail available at the course level. For example, which courses are the most expensive, and which have the highest or lowest margins? What are the class sizes and teacher profiles, and what delivery methods are used? All this is based on the courses actually taken by students in each program, not on catalogue descriptions that include less-than-precise roadmaps about requirements and electives. For example, by looking at how the curriculum works in practice, one can see where particular courses (electives as well as requirements) incur costs that may be disproportionate to their value for the particular degree being studied.

Finally, one can begin to identify courses that present bottlenecks to students’ progress toward their degrees. For example, a high rate of so-called WFD outcomes (withdrawal or a grade of F or D) can signal problems that reviewers might want to look into. The same is true for data on which courses are oversubscribed in particular semesters and locations. The model can easily include such variables if the institution’s data system records them, and benefits such as these can provide the motivation needed to maintain the records.
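A WFD screen of this kind could be as simple as the following Python sketch. The grade records, the course codes, and the 30% flagging threshold are hypothetical choices for illustration; an institution would set its own threshold and draw the data from its student records system.

```python
from collections import Counter

# Outcomes counted as WFD: withdrawal, or a grade of F or D.
WFD_GRADES = {"W", "F", "D"}

# Hypothetical grade records: (course, outcome).
GRADES = [
    ("MATH101", "A"), ("MATH101", "F"), ("MATH101", "W"), ("MATH101", "C"),
    ("ENGL100", "B"), ("ENGL100", "A"), ("ENGL100", "D"),
    ("ENGL100", "B"), ("ENGL100", "C"),
]


def wfd_rates(grades):
    """Return the share of attempts in each course that ended in W, F or D."""
    attempts = Counter()
    wfd = Counter()
    for course, grade in grades:
        attempts[course] += 1
        if grade in WFD_GRADES:
            wfd[course] += 1
    return {course: wfd[course] / attempts[course] for course in attempts}


def flag_bottlenecks(rates, threshold=0.3):
    """List courses whose WFD rate meets or exceeds the (assumed) threshold."""
    return sorted(course for course, rate in rates.items() if rate >= threshold)


RATES = wfd_rates(GRADES)
print(RATES)
print(flag_bottlenecks(RATES))
```

Joined to the course-to-program linkages, a flag like this would show which programs depend on a potential bottleneck course.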

Download the full whitepaper here: www.pilbara.co/whitepaper